Introduction: DeepSeek’s Mixture of Experts Architecture

In the rapidly evolving field of artificial intelligence, the search for efficient and cost-effective models has driven the development of new architectures. DeepSeek’s Mixture-of-Experts (MoE) architecture is one such approach: it aims to cut the cost of running large models while maintaining high performance. By activating only a few specialized expert sub-networks for each input, MoE reduces the computation performed per input, and the savings claimed for AI deployments are as high as 90%. This article explores the mechanisms behind this cost-efficiency, the economic impact, and the broader implications for the AI industry.

How DeepSeek’s Mixture-of-Experts Architecture Reduces AI Costs by 90%: An Overview

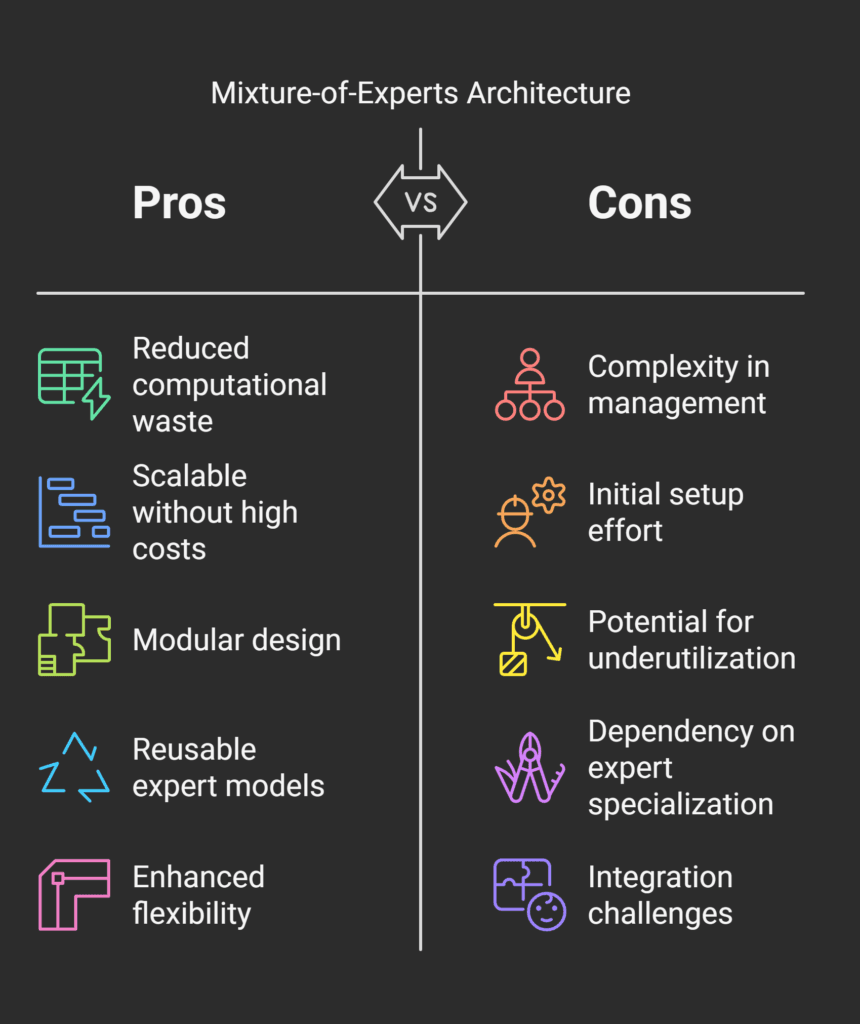

DeepSeek’s Mixture-of-Experts architecture is designed to address the inefficiencies of traditional large-scale AI models. Instead of passing every input through all of the parameters of a single dense network, an MoE model contains a collection of specialized expert sub-networks, and a learned router sends each input to a small number of them. Only the selected experts run during inference, so most of the model’s parameters sit idle for any given input. This dynamic routing lets MoE offer the capacity of a very large model at a fraction of the per-input computation of a dense, monolithic model.

The core innovation of MoE lies in its ability to scale efficiently without proportional increases in computational costs. Unlike conventional models that require massive hardware investments to handle growing workloads, MoE scales by adding more specialized experts, each contributing to the overall model’s capacity without overwhelming the system. This modular design not only reduces the financial burden of deploying AI but also enhances model flexibility and adaptability.

The economic benefits of MoE are further amplified because the same pool of experts is shared across many kinds of inputs and tasks. A single MoE model can therefore cover workloads that might otherwise call for several separately trained and deployed models, helping organizations get more from their AI investments. With DeepSeek’s MoE, the AI industry is moving closer to a future where high-performance models are both accessible and affordable.

Scaling Efficiency: How MoE Minimizes Computational Overhead

One of the primary advantages of DeepSeek’s MoE architecture is its ability to minimize computational overhead. In a traditional dense model, every parameter takes part in processing every input, regardless of what the input is, so much of that computation contributes little to the result. In contrast, MoE dynamically activates only the experts relevant to the input, significantly reducing the number of computations required.

The routing mechanism in MoE plays a critical role in achieving this efficiency. By scoring each input and directing it to the most suitable experts, the router avoids running the input through every expert in the model. This selective activation speeds up inference and lowers energy consumption, making MoE more environmentally friendly. The reduction in computational overhead directly translates to lower operational costs for organizations deploying AI systems.
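The routing step can be illustrated with a minimal sketch. It assumes a common top-k scheme: router scores are turned into probabilities with a softmax, the k highest-scoring experts are kept, and their outputs are combined using renormalized weights. The function names, the toy scalar experts, and the scores are all hypothetical; this is not DeepSeek’s actual implementation.

```python
import math

def softmax(scores):
    """Convert raw router scores into probabilities."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Return [(expert_index, weight)] for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the selected experts
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and return their weighted sum."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))

# Four toy experts; each scalar function stands in for a feed-forward block.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
scores = [0.1, 2.0, 1.5, -1.0]  # hypothetical router scores for one input

selected = route_top_k(scores, k=2)
output = moe_forward(3.0, experts, scores, k=2)
print(selected, output)
```

With these scores, only experts 1 and 2 are evaluated; the other two functions are never called, which is where the compute saving comes from.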

Furthermore, MoE’s scalable design allows it to take on increasingly complex tasks without the steep cost growth associated with dense models. As the number of experts grows, the model’s total capacity grows with it, but the computation for each input depends on how many experts are activated, not on how many exist. As long as the number of active experts stays fixed, per-input compute stays roughly constant even as the model’s capabilities expand.
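A small calculation makes the scaling argument concrete. The sketch below assumes a single MoE layer with equally sized experts and ignores the router’s own parameters; the expert size and counts are made-up numbers chosen for illustration.

```python
def layer_params(num_experts, params_per_expert, top_k):
    """Return (total, active-per-input) parameter counts for one MoE layer."""
    total = num_experts * params_per_expert
    active = min(top_k, num_experts) * params_per_expert
    return total, active

# Grow the expert count while keeping top_k fixed at 2.
rows = [(n, *layer_params(n, 1_000_000, top_k=2)) for n in (8, 64, 256)]
for n, total, active in rows:
    print(f"{n:>4} experts: total={total:,}  active per input={active:,}")
```

Total parameters grow 32-fold between the first and last row, while the parameters used per input do not change.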

Optimizing Resource Utilization in Expert Networks

DeepSeek’s MoE architecture optimizes resource utilization by ensuring that each expert model is specialized and only activated when necessary. This specialization enables experts to achieve higher accuracy and efficiency for their assigned tasks compared to general-purpose models. By focusing on specific inputs, experts can be trained more effectively, leading to faster convergence and better performance.

Another key aspect of MoE is its sparse activation pattern. During inference, only a small subset of experts is engaged for each input, while the rest remain inactive. This reduces the computation per input, but not the memory footprint: all experts generally need to stay loaded, because any of them may be selected for the next input. MoE models are therefore better suited to servers with ample memory than to edge devices or other memory-constrained environments. Because experts operate independently of one another, they can be placed on different devices and run in parallel, further improving the system’s overall efficiency.
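The difference between compute and memory can be estimated with back-of-the-envelope arithmetic. The sketch below assumes 2 bytes per parameter (16-bit weights) and the common rule of thumb of about 2 floating-point operations per active parameter per token in a forward pass. The model sizes are hypothetical.

```python
def weight_memory_gib(total_params, bytes_per_param=2):
    """Memory needed to hold all weights, in GiB (every expert stays loaded)."""
    return total_params * bytes_per_param / 2**30

def forward_flops_per_token(active_params):
    """Rough forward-pass cost: about 2 FLOPs per active parameter."""
    return 2 * active_params

# Hypothetical MoE model: 100B parameters in total, 10B active per token.
total_params, active_params = 100e9, 10e9
print(f"weights: {weight_memory_gib(total_params):.1f} GiB")
print(f"compute: {forward_flops_per_token(active_params):.2e} FLOPs per token")
```

Memory is driven by the total parameter count, while compute is driven by the active count, so a sparse model saves on the second without saving on the first.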

The optimization of resource utilization in MoE extends to training as well. The experts and the router are typically trained together, but each input updates only the experts it was routed to, so a training step touches a fraction of the model’s parameters. Experts can also be spread across devices, which makes effective use of distributed computing resources. By aligning resource utilization with task requirements, MoE improves efficiency in both training and deployment.

Economic Impact: Achieving 90% Cost Reduction in AI Deployments

DeepSeek’s MoE architecture delivers substantial economic benefits, enabling organizations to reduce AI deployment costs by up to 90%. These savings are primarily attributable to lower hardware requirements, reduced energy consumption, and decreased operational expenses. By optimizing resource utilization and eliminating unnecessary computational overhead, MoE allows businesses to implement high-performance AI solutions at significantly lower costs.
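To see where a figure in this range could come from, consider per-token compute alone. The sketch below compares an MoE model with a dense model of the same total size, which is an assumption made for illustration. It ignores memory, communication between devices, and routing overhead, so it shows an upper bound on compute savings rather than a measured cost reduction.

```python
def compute_reduction(active_params, total_params):
    """Fraction of per-token compute saved versus a dense model using all parameters."""
    if not 0 < active_params <= total_params:
        raise ValueError("active_params must be in (0, total_params]")
    return 1.0 - active_params / total_params

# Hypothetical model in which 10% of the parameters are active per token.
print(f"{compute_reduction(10e9, 100e9):.0%}")  # 90%
```

Under these assumptions, a 90% compute reduction corresponds to activating about one tenth of the model’s parameters for each token.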

The cost reduction also extends to the training phase. Because each input exercises only a few experts, an MoE model can be trained with less compute than a dense model of the same total size. Lower training cost also shortens the development cycle, enabling faster time-to-market for AI-powered products and services.

The broader economic implications of MoE are equally transformative. By making AI more affordable and accessible, DeepSeek’s architecture is democratizing access to advanced AI capabilities. This cost reduction is particularly beneficial for small and medium-sized businesses, which can now compete with larger enterprises in the AI space. As MoE continues to gain adoption, it promises to drive innovation and economic growth across industries.

DeepSeek’s Mixture-of-Experts architecture represents a significant shift in AI design, offering a highly efficient and cost-effective alternative to traditional dense models. By minimizing computational overhead, optimizing resource utilization, and cutting deployment costs by up to 90%, MoE is reshaping the economics of AI. As organizations increasingly adopt this architecture, the AI industry is poised to become more accessible, sustainable, and innovative, unlocking new possibilities for businesses and societies worldwide.

1. What is Mixture of Experts (MoE) architecture?

Mixture of Experts (MoE) is a neural network architecture where instead of using one large, dense network, multiple smaller “expert” networks are used. For each input, only a subset of these experts is activated, determined by a “gating network.” This selective activation allows for a larger overall model capacity without increasing computational cost for every input.

2. How does DeepSeek utilize MoE architecture?

DeepSeek utilizes MoE in its language models to achieve high performance with reduced computational demands. By employing MoE, DeepSeek models can have a massive number of parameters, but only a fraction of these parameters are actively engaged for any given input, leading to efficiency gains.

3. How does MoE architecture reduce AI costs?

MoE reduces AI costs primarily by decreasing the computational resources needed for inference and training. Since only a subset of experts is active at any time, less computation is required compared to dense models of similar size. This translates to lower energy consumption, faster processing, and reduced infrastructure costs.

4. What kind of cost reduction does DeepSeek claim with MoE?

DeepSeek claims that their MoE architecture can reduce AI costs by up to 90% compared to traditional dense architectures for models with comparable performance. This significant reduction makes advanced AI more accessible and sustainable.

5. Is the 90% cost reduction applicable to both training and inference?

While MoE architectures primarily shine in reducing inference costs, they can also offer training efficiencies. The 90% figure likely refers to the potential reduction in inference costs, which is a major factor in the overall operational expenses of large AI models. Training cost reduction might also be significant but could vary depending on implementation.

6. Does MoE affect the performance of AI models?

Not negatively, when implemented correctly; MoE can actually enhance model performance. With specialized experts, the model can learn more complex patterns and achieve higher accuracy than a single dense network with the same computational budget. DeepSeek emphasizes that their MoE models maintain or improve performance while reducing costs.

7. What are the main components of DeepSeek’s MoE architecture?

DeepSeek’s MoE architecture, like typical MoE models, includes expert networks and a gating network. The gating network routes each input to the most relevant experts. DeepSeek has described implementation details, such as the number of experts and the gating mechanism, in the technical reports published alongside its models, and those reports are the authoritative source for specifics.
